Think about the last time you asked an AI assistant to help you find a discount. Or the last time you trusted a browser extension to apply a promo code at checkout. What happened when it actually tried to use that code?

If you're like most people, the code failed. Then it tried another. Failed again. By the fifth attempt, you'd either given up or gotten locked out of the checkout entirely.

This isn't a fluke. It's the default state of AI-assisted shopping.

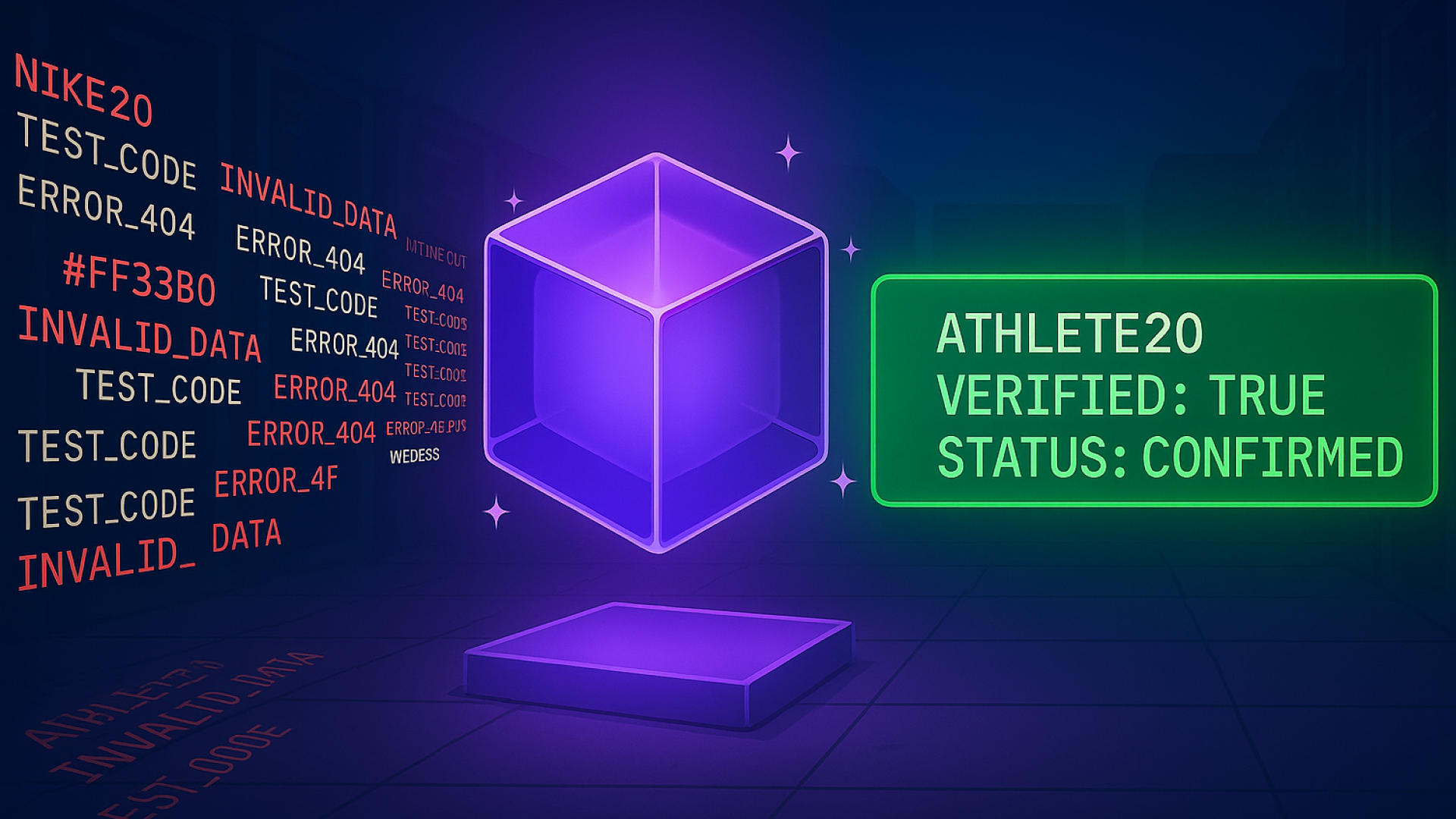

AI agents are hallucinating at checkout. When GPT-4 or Claude encounters a promo code field, they make probabilistic predictions based on training data contaminated by years of SEO spam. They output NIKE20 or SAVE15 because these strings appeared 10,000 times in their training corpus - not because they're valid today.

The result: agents behave like credential-stuffing botnets. They hammer merchant checkout endpoints with invalid codes, trigger WAF (Web Application Firewall) blocks, and poison IP reputation. A shopping assistant that worked yesterday suddenly can't access half the stores on the internet.

This isn't just a consumer UX problem. This is an infrastructure problem. AI agents need deterministic, verifiable truth at the transaction layer. They need a stop condition. They need proof that a code actually works - or proof that no codes exist so they can stop searching.

SimplyCodes isn't a coupon site. We're a Probabilistic Truth Refinery - the verification infrastructure layer that makes agentic commerce possible.

This is how we built it.

I. The architecture: Byzantine Fault Tolerance for commerce

When you search for a promo code online, you're trusting that someone actually tested it. Most sites didn't. They scraped text strings from competitors, generated plausible-looking codes with AI, or simply made them up. The economics reward volume over truth.

We had to solve a harder problem: How do you manufacture truth when you can't trust any single source?

This is the Byzantine Generals Problem from distributed computing. Multiple parties need to reach consensus, but some parties are adversarial or unreliable. Traditional crowdsourcing fails here because it assumes honest actors. The coupon ecosystem assumes the opposite.

We built a three-layer Byzantine Fault Tolerant (BFT) architecture where truth emerges from adversarial consensus.

Layer 1: The blindness protocol (eliminating bias)

Our verification system enforces double-blind assignment. When a validator tests a code, they don't know:

Source origin (merchant email, user submission, scraped competitor)

Current database status (trending, previously verified, flagged)

Other validators' votes

Merchant identity until execution moment

This is Entropy Injection. By randomizing the verification pool and hiding state, we prevent informational cascades - the herding behavior where validators unconsciously align with perceived consensus.

The math: Condorcet's Jury Theorem proves that independent votes converge on truth when error rates are uncorrelated. Traditional systems violate this by showing validators the "current score." We maintain independence.

The attack it prevents: Sybil resistance. An attacker can't bribe a specific validator because they can't guarantee assignment. The cost of attack scales asymptotically with network size.

Layer 2: The staking protocol (economic alignment)

Every validator has a Reputation Score - a numerical asset functioning as collateral. When they verify a code, they're staking their accumulated reputation on the outcome.

The mechanism is straightforward: accurate verifications increase reputation and earning power, while inaccurate verifications decrease both. Low-reputation validators lose access to high-paying tasks, visibility, and bonus pools. This creates what we call the Nash Equilibrium of Honesty - accuracy becomes the dominant strategy.

Compare this to affiliate networks where the dominant strategy is lying. In the Failure Economy, sites generate fake codes for $0.06-0.15/page and earn $8 in affiliate commissions regardless of validity. That's a +275% margin. Truth costs $6.40-$21.75/page in verification infrastructure and returns $0 when no codes exist. That's a -400% margin.

We inverted the incentive function. In our system, lying has negative ROI because reputation destruction costs more than the one-time payment gain.

The insight: We replaced capital staking (ETH in blockchain) with reputation staking. Building reputation requires time and accurate work (Proof-of-Work). Destroying it for a $0.10 fake code has negative ROI.

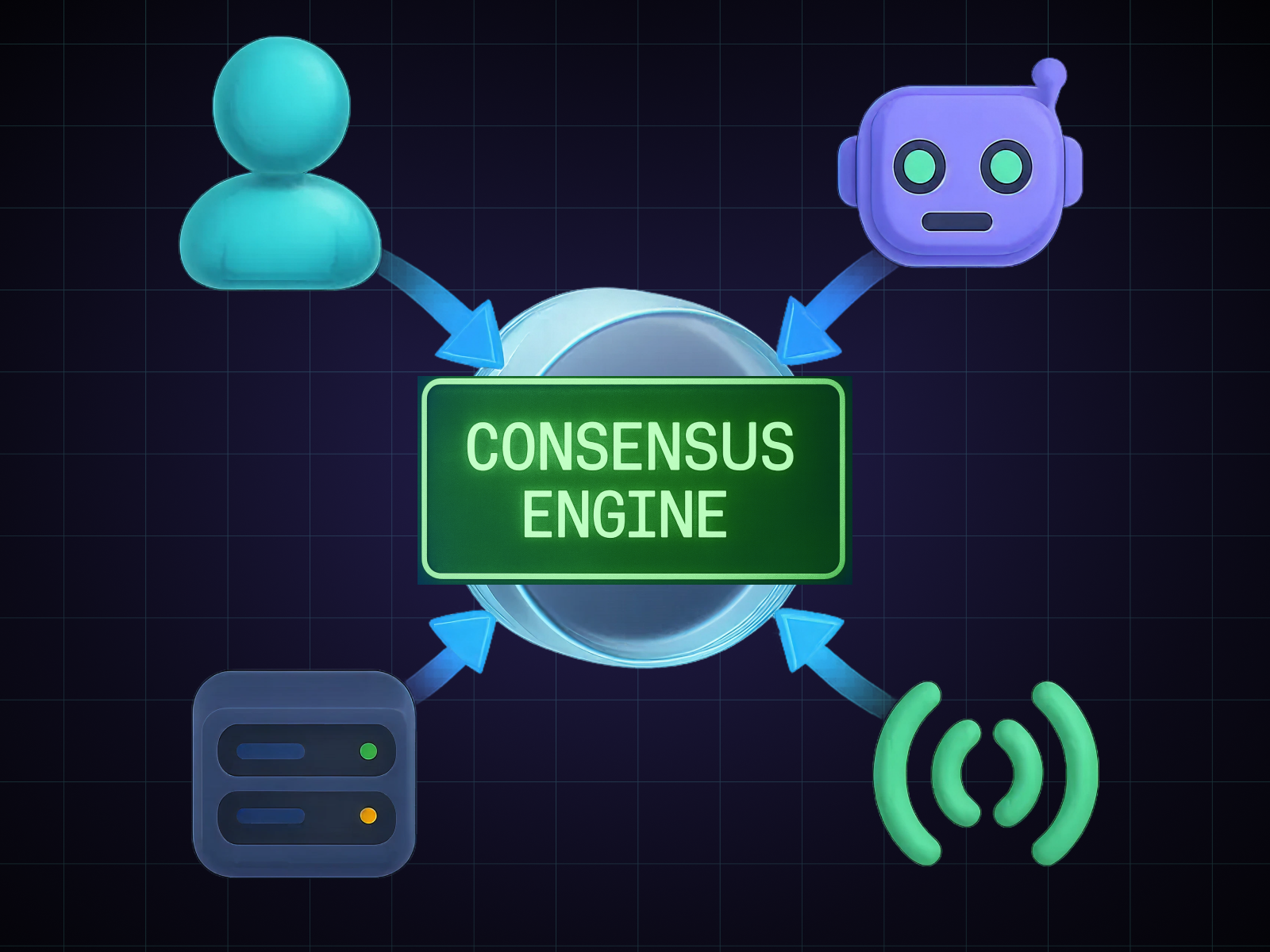

Layer 3: The consensus engine (multi-node agreement)

No code achieves "Verified" status without multi-layer consensus:

Node Type 1: Human Validators (3-7 per code)

Low throughput, high context

Provide Proof of Execution (screenshots, checkout completion)

Resolve semantic gaps AI misses (restricted items, conditional logic)

Weighted by reputation tier (Diamond validators carry significantly more weight than new validators)

Node Type 2: AI Agents (ACT/SVS systems)

High throughput, low trust

Process 1M+ signals to identify candidates

Function as Filter, not Oracle

Flag probabilistic matches for human verification

Examples: Ron (newsletter scanner), SVS (synthetic cart tester)

Node Type 3: Merchant API (when available)

Direct validation endpoint queries

Highest trust weight

Real-time state checks

Examples: Shopify validation API, custom merchant integrations

Node Type 4: User Feedback (post-publication)

Crowd validation at scale

Triggers re-verification when consensus breaks

Weighted by user history and account age

The consensus approach: We use weighted averaging where each vote's influence is determined by the node's reputation and type. Merchant API votes carry the highest weight, followed by trusted human validators, then AI systems, then anonymous user feedback. This creates resilience to bot flooding - coordinated spam from low-reputation sources can't override consensus from trusted validators.

The topology: This creates a "Closed Trust Loop." AI prevents human overload (filters 1M signals down to 1K candidates). Humans prevent AI hallucination (test ground truth). Protocol prevents coordination attacks (penalizes reputation for lying).

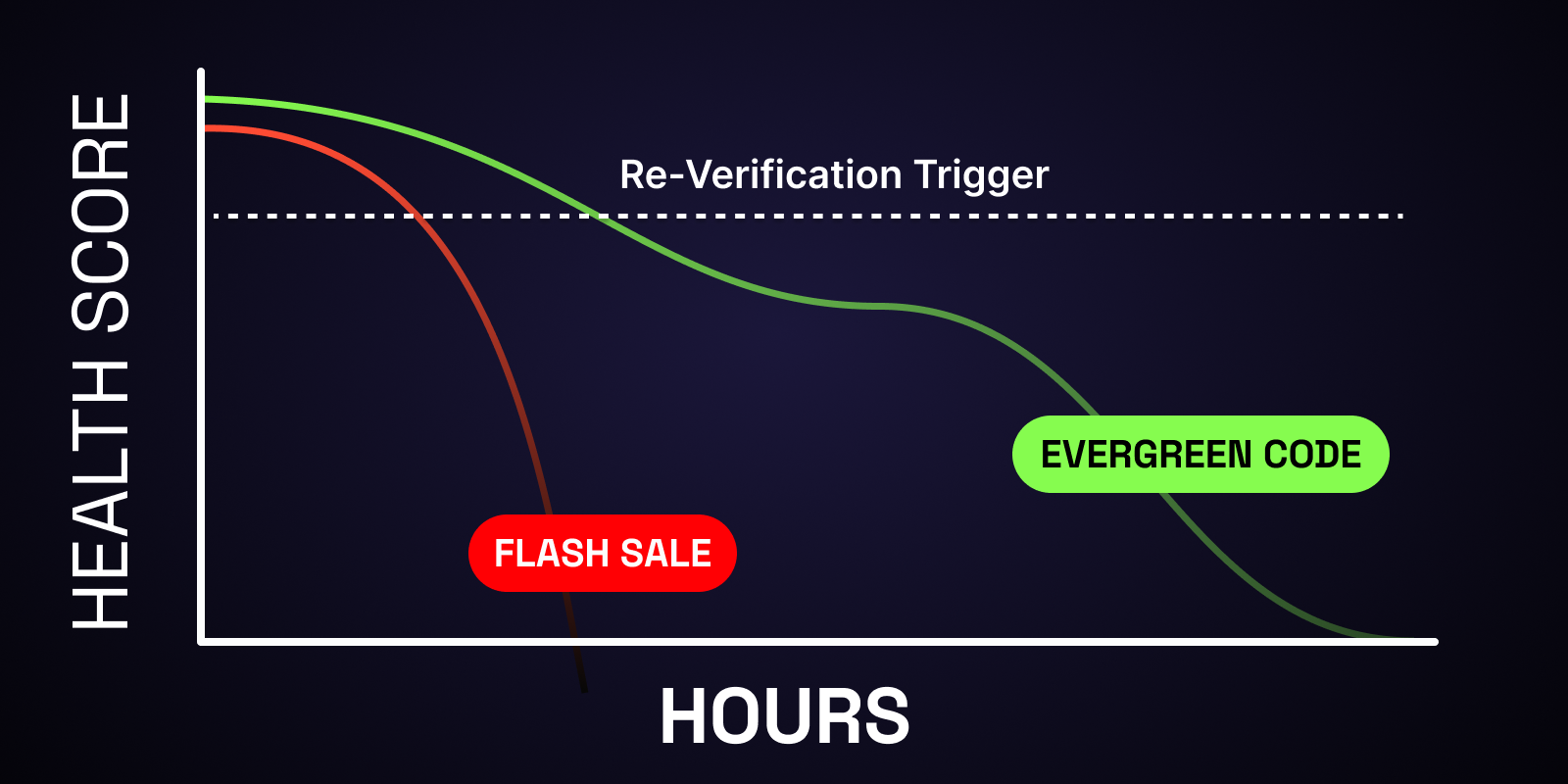

II. The state decay function: The half-life of truth

A code that worked this morning might be dead by lunch. Flash sales end. Usage caps hit. Merchants pull promotions early without warning. That "verified" code from last week? Useless.

Coupon validity isn't binary. It's probabilistic and temporal. A verification is a point-in-time snapshot. As time progresses, confidence naturally decreases.

We model this as exponential decay. Each code has a Health Score (0-100) that represents our current confidence in its validity. This score decreases over time at a rate calibrated to the merchant's historical volatility.

High-volatility merchants (flash sales, holiday promotions) see faster decay - we might lose confidence within hours. Stable merchants with evergreen promotions decay much more slowly - confidence might hold for days. The decay rate is learned from historical patterns: how often does this merchant change codes? How long do their promotions typically last?

When a code's Health Score drops below our confidence threshold, the system automatically triggers re-verification. This solves the "stale data" problem inherent to scraping.

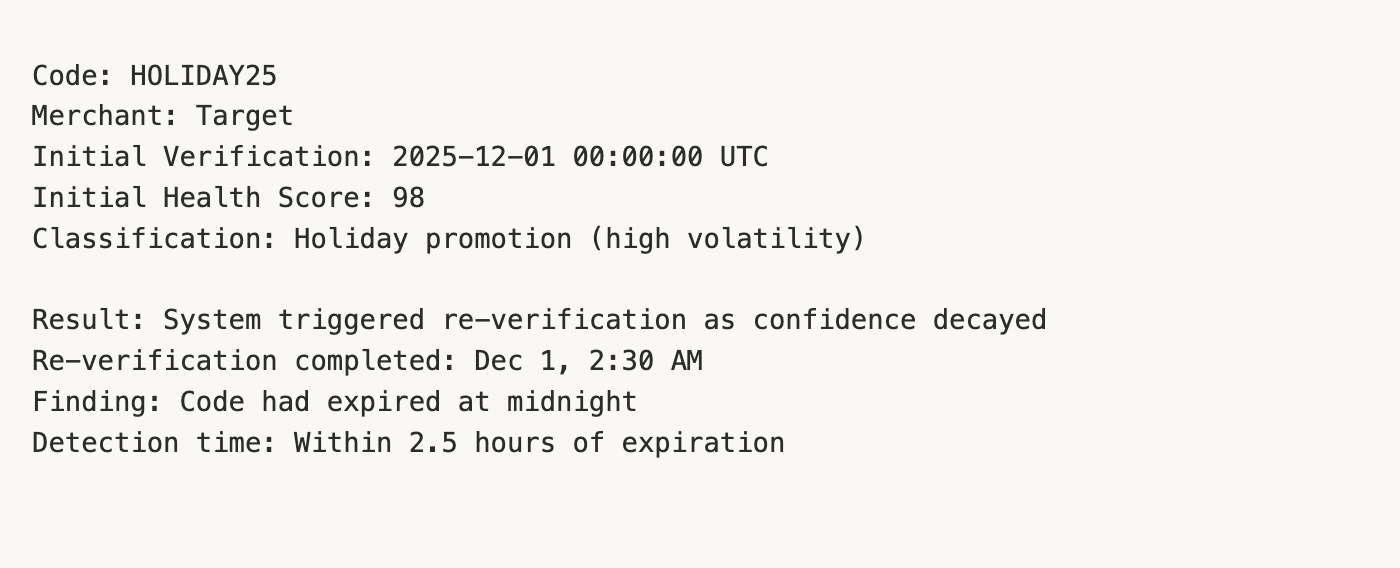

Example:

This example shows how our decay model caught a holiday code expiration within hours, not days. The system knew to check more frequently because holiday promotions are volatile.

Why this matters for AI agents: A static verification from 2 weeks ago is worthless. Our system provides temporal confidence. Agents can query: "What's the probability this code works right now?" We return both the current score and metadata about how quickly confidence is decaying.

III. The transaction integrity protocol: AI safety infrastructure

Imagine you ask an AI shopping assistant to find you a promo code. It searches the web, finds "NIKE20" listed on 47 coupon sites, and confidently tells you it works. You try it. Invalid. The AI tries five more. All invalid. By the sixth attempt, you're locked out of checkout entirely.

This is happening at scale. And it's about to get much worse.

This is where SimplyCodes becomes critical infrastructure for agentic commerce.

The hallucination vector

When AI agents encounter promo code fields, they engage in "probabilistic execution" - iteratively POSTing statistically plausible strings to /checkout/apply-coupon endpoints.

To defensive heuristics (WAFs), this behavior is indistinguishable from credential stuffing. The digital exhaust includes:

High-velocity 4xx error rates (400 Bad Request, 422 Unprocessable)

Anomalous navigation patterns (bot-like speed, no mouse movement)

Repeated form submission attempts from single IP

Missing browser fingerprints (TLS signatures, canvas hashes)

This triggers targeted protection rules. AWS WAF uses TGT_ML_CoordinatedActivityMedium and TGT_ML_CoordinatedActivityHigh rule groups. Cloudflare uses Bot Score thresholds (<30 = definite bot, 30-50 = likely bot). Both result in immediate IP blocking.

If the agent operates from shared egress IPs (OpenAI's server farm), this creates IP Reputation Poisoning - systematically degrading model reliability across the merchant network.

Measured impact:

Agent attempting 10+ invalid codes: triggers WAF blocking within 60 seconds

Shared IP reputation degradation: increase in CAPTCHA challenges for all subsequent requests from that IP

Recovery time: hours to days for IP reputation reset

The sanitization layer

SimplyCodes functions as a Deterministic Middleware Oracle - a pre-checkout firewall sitting between the Probabilistic Agent and the Deterministic Merchant.

The architecture is straightforward: the agent queries our API before attempting any code on the merchant site. Our verification engine evaluates the code against our consensus data. If the code meets our confidence threshold, we return it with full constraint metadata. If it doesn't meet the threshold, we return a structured failure response explaining why - the code expired, the cart is below minimum, the user's location is restricted, whatever the specific reason.

This is Circuit Breaker Logic. If a code doesn't meet strict confidence threshold, the system logically pre-empts the agent from attempting it on the merchant site. The agent never sends a bad request.

The result: The agent moves from "Generative" (guessing) to "Symbolic" (executing). Zero 4xx errors against merchant infrastructure. The agent's non-human nature becomes invisible to WAF heuristics.

Performance metrics:

Traditional AI shopping agent: 6-12 invalid code attempts per checkout

SimplyCodes-integrated agent: 0-1 invalid code attempts per checkout (only when merchant changes code mid-session)

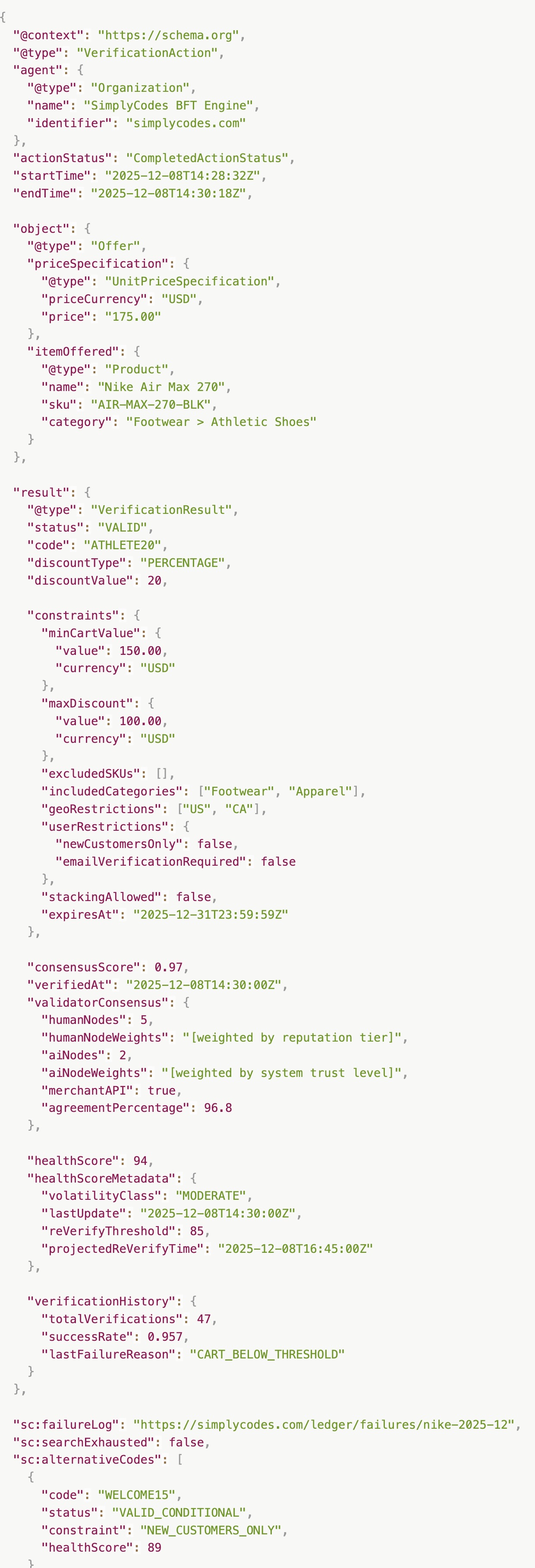

IV. The Proof Packet: Verifiable truth as a data structure

For AI agents, truth must be machine-readable. A human can read "20% off, minimum $150" and understand it. An AI agent needs structured data it can evaluate programmatically before attempting checkout.

We architected the Proof Packet as a JSON-LD (Linked Data) object - a self-describing semantic container that carries both the verification result and all the metadata an agent needs to make decisions.

The Schema Structure

Critical field explanations

consensusScore (0.97): This is the confidence level that the code actually works, based on weighted agreement across all validators. Trusted validators - those with long track records of accurate verifications - carry significantly more weight than new or anonymous sources. A 0.97 means high confidence based on weighted evidence from multiple independent sources.

healthScore (94): Real-time confidence after applying the time-decay function. Unlike consensusScore (which is static for a verification event), healthScore decreases over time until re-verification. Think of it as "freshness" - a code verified 5 minutes ago is more trustworthy than one verified 5 hours ago.

volatilityClass: Indicates how stable this merchant's promotions typically are. HIGH volatility means codes change frequently (flash sales, limited-time offers). LOW volatility means codes tend to be stable (evergreen welcome discounts). This helps agents understand how much to trust an older verification.

constraints object: The rules an agent must check before attempting the code. If minCartValue is 150.00 and the cart is $145, the agent knows to either suggest adding items or skip this code entirely. This prevents the frustrating "code invalid" experience.

sc:searchExhausted (false): The stop condition. When true, tells the agent "we've tested every discoverable code for this merchant/cart combination. None work better than this. Stop searching." This is how we provide cognitive closure at scale - for both humans and AI agents.

sc:failureLog (URL): Link to our Glass Box - the list of codes we tested that failed, with specific failure reasons. We show our work. This is unique to SimplyCodes.

Why JSON-LD matters

Standard JSON is ambiguous. A field called "score": 0.97 could mean anything. JSON-LD uses URI-based identifiers from schema.org to create universal semantics:

This tells any consuming system (not just our API clients) that this object represents the result of a verification action, not a user review or product rating. The @type maps to https://schema.org/VerificationResult, a globally understood concept.

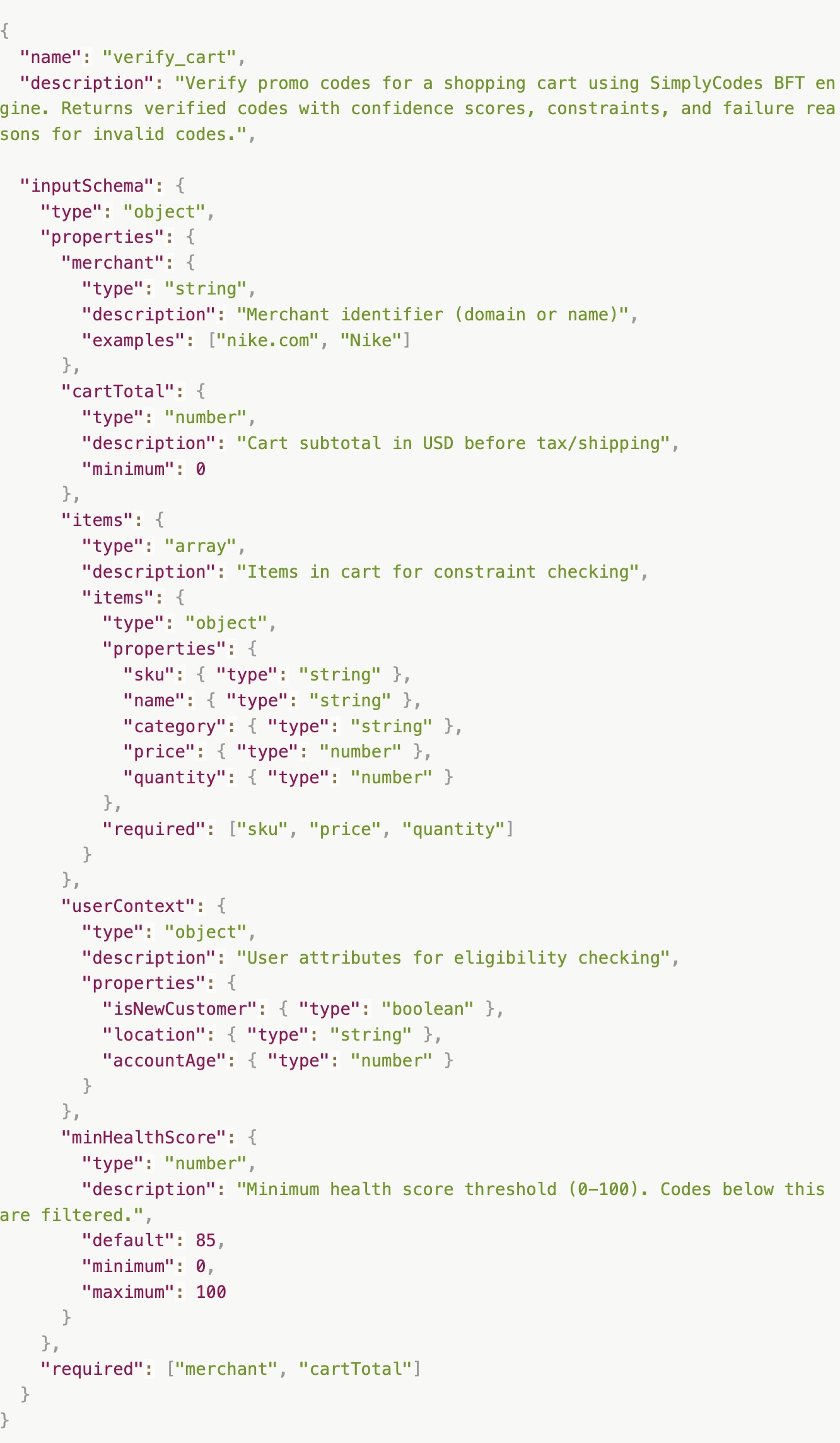

V. The Model Context Protocol integration

The transport layer uses MCP (Model Context Protocol) to decouple our ledger from specific LLMs. This allows any AI agent to query our verification engine through a standardized interface.

Think of it like USB for AI tools. Instead of building custom integrations for every model, we expose a single tool definition that any MCP-compatible agent can use.

The tool definition

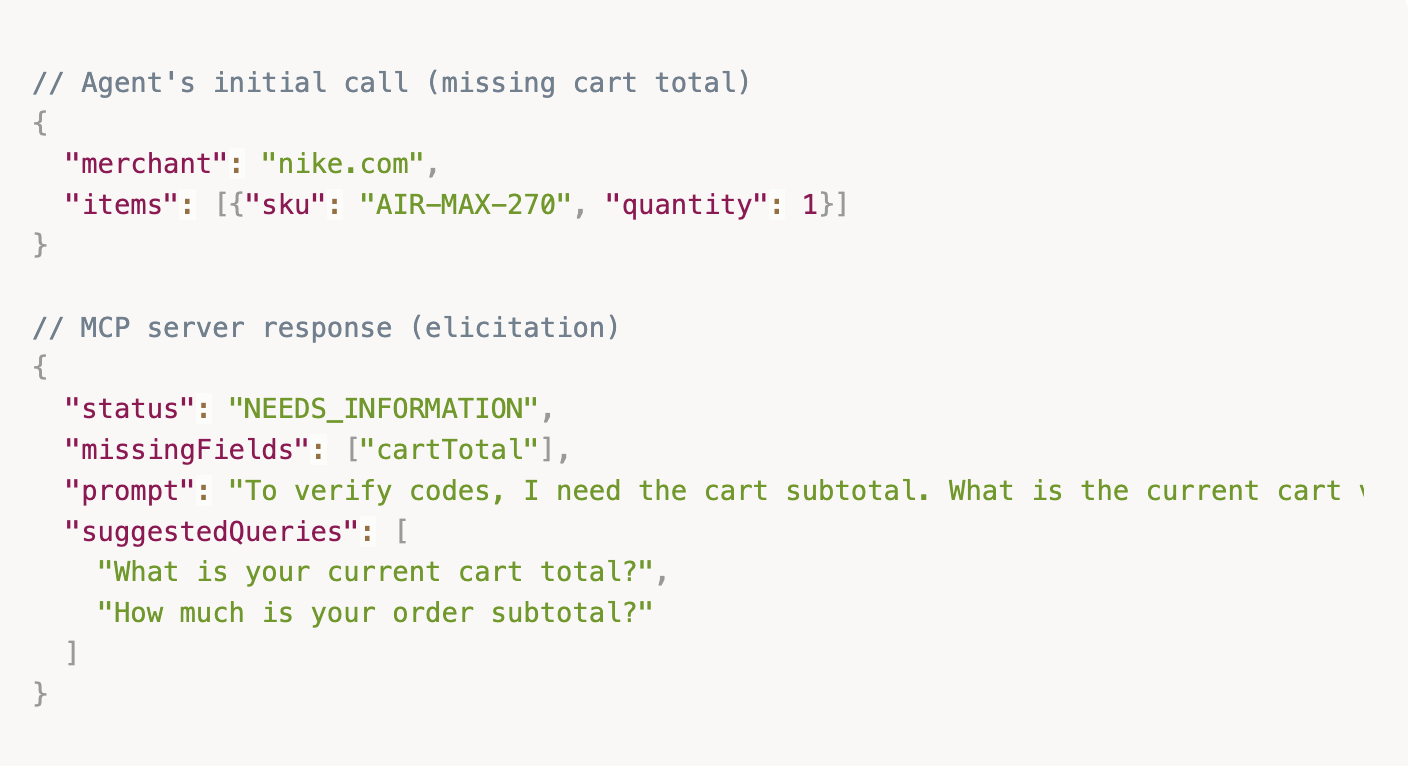

The elicitation engine

If an agent calls the tool with insufficient context, the MCP server doesn't just fail. It returns a Sampling Request that coaches the agent to gather the missing information from the user.

Example interaction:

This solves the "Hallucination of Parameters" problem. Instead of the agent guessing the cart total (and potentially getting a wrong verification result), the system coaches the agent to ask the user for the correct information.

Why this matters: If an agent assumes a $100 cart but the real cart is $175, we might incorrectly reject a code with a $150 minimum. By forcing parameter collection, we ensure accurate verification. The user gets the right answer instead of a false negative.

VI. The Glass Box philosophy: Showing the work

Most coupon sites are black boxes. You see "23 Active Codes!" but you have no idea if anyone actually tested them. When codes fail, you get no explanation - just "Invalid" and a wasted minute of your life.

We built the opposite: radical transparency.

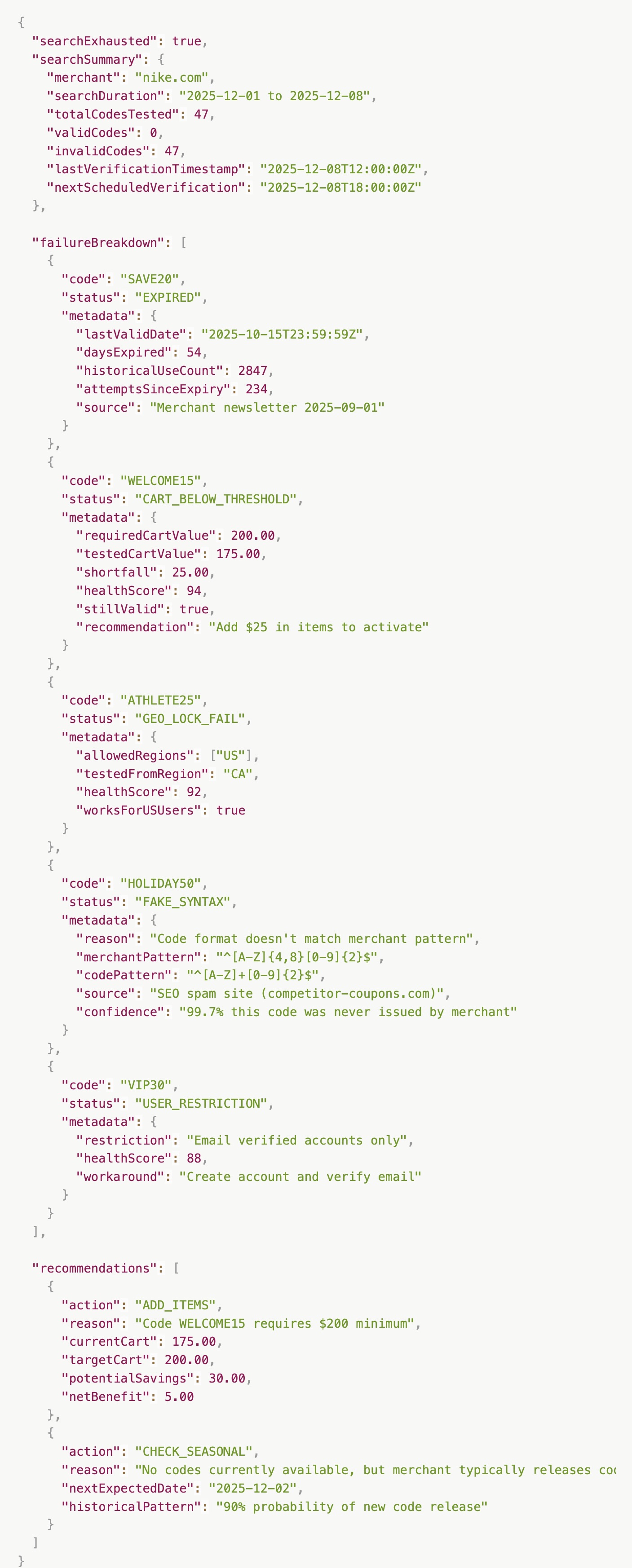

When we return "No codes found," we also expose the complete forensic audit. Here's what that looks like:

The failure taxonomy

JSON

This failure log shows exactly why each code didn't work: SAVE20 expired months ago, WELCOME15 needs a higher cart value, ATHLETE25 only works in the US, HOLIDAY50 was never real (SEO spam), and VIP30 requires email verification.

Why This Matters

Failure data is high-value signal. It transforms "Error 400" into actionable intelligence.

For differentiating between code types:

Dead code (universally invalid, don't retry)

Conditional code (valid but constraints unmet, can be activated)

Geo-locked code (invalid for this user, but code itself works)

For making intelligent decisions: An agent sees CART_BELOW_THRESHOLD with $25 shortfall. It calculates: adding $25 in items + 15% discount = $3.75 net savings. It can suggest to the user: "Add one more item to unlock the discount." This increases merchant GMV while providing genuine savings.

For learning merchant patterns: Historical data shows Nike releases codes on the first Monday of each month. The agent can tell the user: "No codes now, but check back Monday." This manages expectations and prevents frustration.

For detecting fraud: The FAKE_SYNTAX flag tells the agent not to waste time on SEO spam. The user doesn't waste time trying codes that never existed.

Competitors show you 23 "Active" codes and hide that they tested zero. We show you zero active codes and prove we tested 47. This is the inversion.

VII. The Network Effect: Anti-fragile truth

As our user base scales (currently 2.3M+ monthly active users), we gain a secondary verification layer: Crowd Truth.

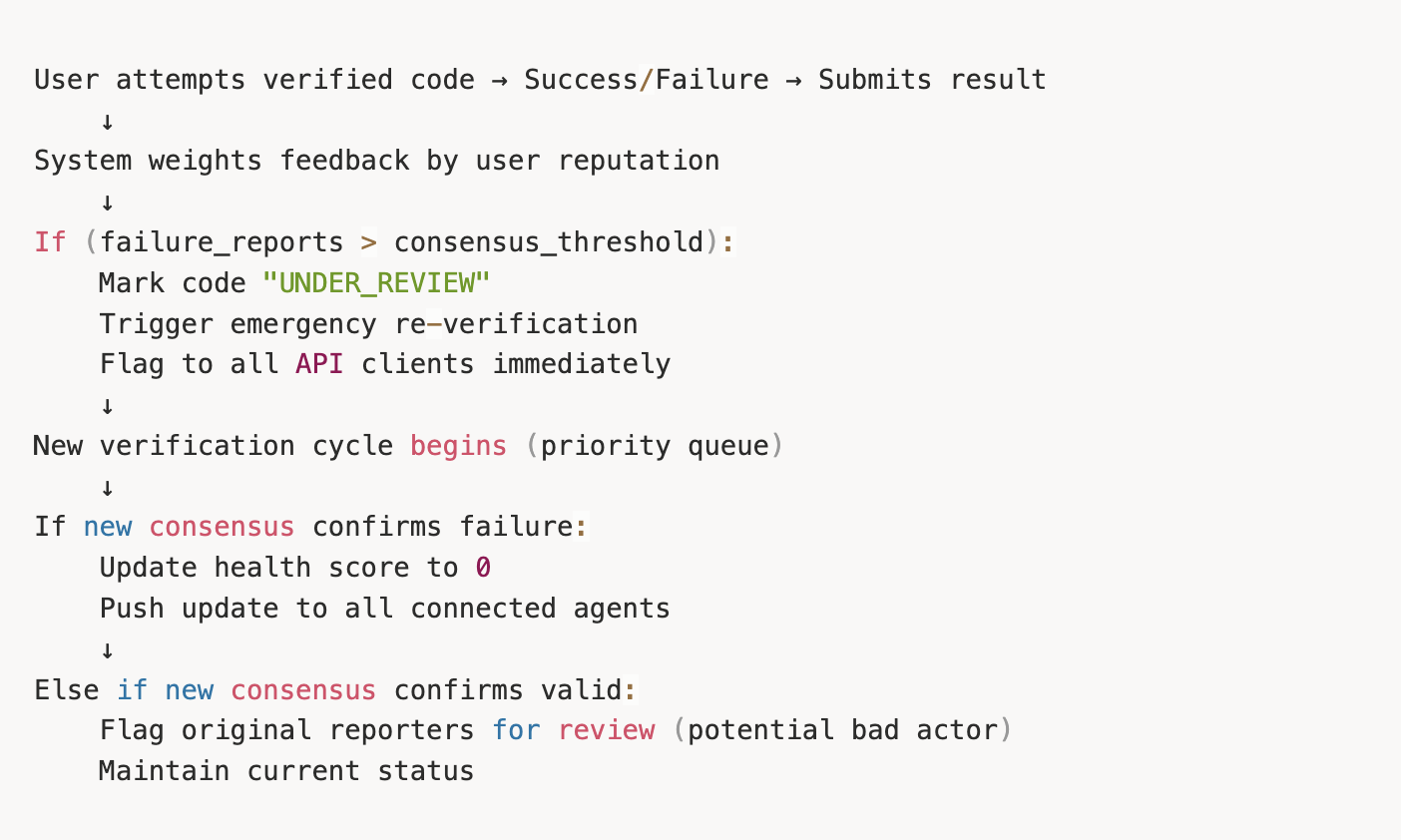

Every time a user tries a code we marked "Verified" and it fails, they can report it. Every time a user discovers a working code we missed, they can submit it. This creates a feedback loop that makes the system smarter over time.

The feedback loop

The process works like this: a user attempts a verified code and reports success or failure. The system weights that feedback by the user's reputation - a user with a long history of accurate reports carries more weight than a brand-new account. If failure reports from trusted users exceed our threshold, the code immediately gets flagged for emergency re-verification. Our validators test it again, and if they confirm the failure, we update the health score to zero and push that update to all connected agent sessions within minutes.

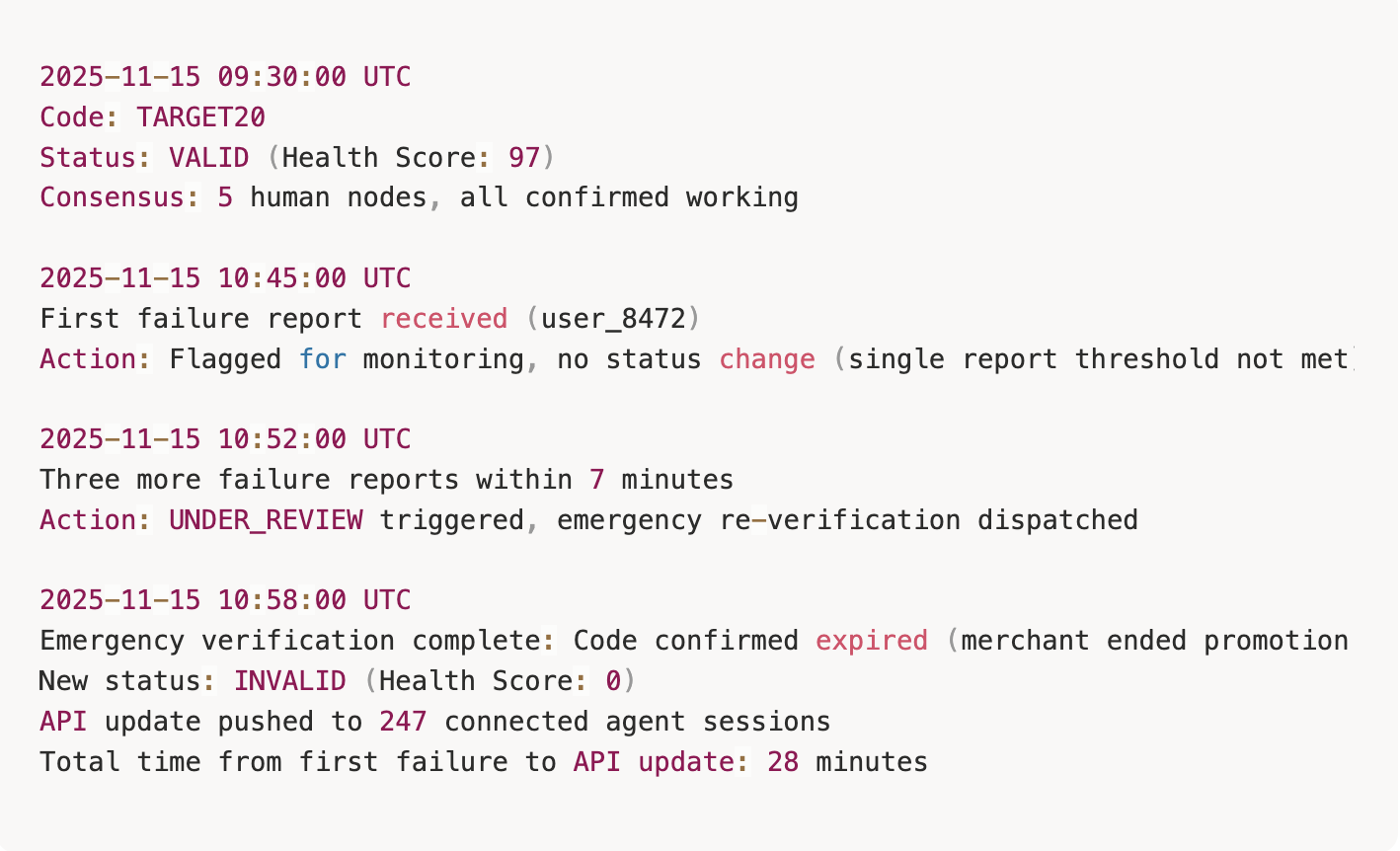

Real-world example:

This example shows how crowd feedback caught a merchant ending a promotion early. Within 28 minutes of the first failure report, all 247 connected AI agent sessions had the updated status.

Why This Makes Us Anti-Fragile

Traditional systems degrade with scale. More users means more spam and noise. More volume means lower signal-to-noise ratio. More traffic means slower response times.

SimplyCodes improves with scale. More users means faster detection of code expirations. More volume means more validation points (network effect). More traffic means more data for machine learning models.

This is anti-fragility: the system gains from disorder.

Every user becomes a validator. Every checkout becomes a data point. Every failure report strengthens the network's immune system.

VIII. Why this matters for OpenAI

OpenAI is building Operator - an AI agent that can browse the web and complete tasks for you, including shopping. When Operator encounters a promo code field, it will need to decide: guess based on training data, or verify against ground truth?

Right now, most AI agents are set up to guess. Here's why that's a problem.

Without verification infrastructure, Operator has a critical vulnerability: Transactional Hallucination. This is when an agent outputs a code because it appeared in training data, not because it is valid today. The consequences compound quickly.

The first problem is IP reputation damage. When an agent attempts ten or more invalid codes per checkout, merchant WAFs flag this behavior as a bot attack. If Operator runs from shared egress IPs, those IPs get blocked - and suddenly all Operator sessions from that IP start failing across the merchant network. Users don't understand WAF mechanics; they just see a "broken" shopping agent and blame OpenAI. The cost compounds: a significant increase in CAPTCHA challenges across the board, with hours to days of recovery time per IP block. At scale, this becomes a constant firefight.

The second problem is friendly fraud. An agent hallucinates NIKE20 based on 2021 training data and presents it to the user: "I found a 20% discount." The user approves the transaction expecting to pay $140. The code fails. The actual charge is $175. The user feels deceived and initiates a chargeback or disputes the transaction. Each incident costs $15-25 in chargeback fees, damages user trust, and potentially triggers payment processor flags. Multiply this across millions of shopping sessions and the liability exposure becomes significant.

The third problem is model collapse. Operator generates synthetic shopping data as it operates. Other sites scrape Operator's outputs for their own training data. Future models train on datasets that include Operator's hallucinations. The next model version is trained on its own errors. Accuracy degrades with each generation. This is the recursive pollution problem - the "gene pool" of commerce data gets progressively contaminated, and cleaning it becomes exponentially harder.

The SimplyCodes Solution

We're not a coupon app requesting ChatGPT store placement. We're Transaction Integrity Infrastructure - the deterministic guardrail for probabilistic agents.

The positioning is technical: we're not a consumer discount extension, we're a pre-checkout firewall. A sanitization layer. Cryptographic truth verification for commerce. The closest analogy is Stripe Radar for fraud prevention - but instead of detecting fraudulent transactions after the fact, we prevent the bad data from entering the transaction flow in the first place.

Our MCP server exposes the verify_cart tool. OpenAI can whitelist SimplyCodes as trusted infrastructure, allowing Operator to query before attempting (check our API before POSTing to merchant), receive Proof Packets (structured verification with confidence scores), execute deterministically (only attempt codes above the confidence threshold), handle failures gracefully (parse constraint violations and suggest workarounds), and provide provenance (show users cryptographic proof of verification).

The competitive moat is straightforward: Operator becomes the only shopping agent that doesn't hallucinate at checkout. While competitors' agents trigger WAF blocks and generate friendly fraud, Operator executes flawlessly because it's operating on verified truth.

This isn't a nice-to-have feature. This is infrastructure that makes agentic commerce possible.

IX. The Vision: Determinism Layer for All Commerce

We're building beyond coupons. SimplyCodes is becoming the Truth Layer for algorithmic commerce.

Consider the landscape: AI agents hallucinate prices based on training data from expired sales. Dynamic pricing operates invisibly, with surge pricing appearing at checkout. Prices change mid-session when you hesitate. Dark patterns manipulate algorithmically - scarcity timers, fake stock counts, manufactured urgency. Bait-and-switch tactics are automated at scale.

In this environment, someone must be the source of verifiable, deterministic truth. That's the role we're building toward.

We're not trying to be the biggest coupon site. We're building the Forensic Data Service that makes AI commerce trustable. Every transaction will eventually require a trust layer. As commerce becomes algorithmic, truth must be cryptographic. SimplyCodes is that layer.

Conclusion: Stop guessing. Start auditing.

The era of probabilistic commerce is ending. AI agents need determinism.

SimplyCodes provides it through Byzantine Fault Tolerance (truth from adversarial consensus), reputation staking (economic alignment of validators), temporal decay modeling (real-time confidence that degrades appropriately), radical transparency (Glass Box showing all tested codes and failure reasons), and cryptographic provenance (JSON-LD Proof Packets with full validator metadata).

We're the only verification infrastructure built for the agent era. We're not competing with coupon sites. We offer the Deterministic Verification API - the Truth Engine that makes their existence obsolete.

For OpenAI: Operator needs this. Without verification infrastructure, agentic commerce fails at scale. We're offering the determinism layer that makes your vision possible.

For AI Developers: Stop training on garbage. Our API provides clean, verified truth.

For Merchants: Stop fighting fraud. We prevent the bot attacks before they happen.

For Users: Stop guessing. Get proof.

X. Frequently Asked Questions

Why do AI agents fail at checkout?

AI agents fail at checkout because they're guessing, not verifying. When an AI encounters a promo code field, it predicts what code might work based on patterns in its training data - not whether the code actually works today. This is like asking someone what codes they remember seeing online, rather than asking them to test the code right now. The result: expired codes, fake codes, and codes with restrictions that don't match your cart. When agents try these invalid codes repeatedly, merchant security systems flag the behavior as a bot attack and block access.

What causes AI shopping agent hallucinations?

AI shopping agents hallucinate codes because of Temporal Blindness - they can't distinguish between "this code appeared frequently online" and "this code works right now." If a code like NIKE20 was popular in 2021 and appeared on thousands of websites, the AI learned that pattern. But the code expired years ago. The AI doesn't know this because it's predicting text patterns, not checking live validity. This is why you need a verification layer between the AI's prediction and the actual checkout attempt.

How do I prevent my shopping bot from getting blocked?

To prevent WAF blocking, your agent needs to stop acting like a botnet. Instead of brute-forcing code after code against a checkout endpoint, integrate with a verification API that tests codes before you attempt them. SimplyCodes' MCP server provides a verify_cart tool that returns only codes above the confidence threshold. This means your agent makes zero or one code attempts per checkout instead of 10+, keeping your IP reputation clean and avoiding bot detection entirely.

What makes SimplyCodes different from other coupon sites?

Most coupon sites are in the business of generating clicks, not verifying codes. They scrape text strings from competitors, generate plausible-looking codes with AI, and publish them without testing. They make money when you click "Reveal Code" regardless of whether it works. SimplyCodes is in the business of manufacturing truth. We use Byzantine Fault Tolerance - multiple independent validators who don't know each other's votes - to reach consensus on whether a code actually works. We show our failure logs publicly. We decay our confidence scores over time. We're building verification infrastructure, not another coupon aggregator.

Can AI agents use SimplyCodes?

Yes. We built SimplyCodes specifically for the agent era. Our API returns structured Proof Packets in JSON-LD format that any AI can parse. Our MCP server exposes a verify_cart tool compatible with Claude, GPT-4, and any MCP-compatible agent. The tool includes constraint checking (cart minimums, geo-restrictions, user eligibility) so agents can evaluate codes programmatically before attempting them. This is why we call ourselves Transaction Integrity Infrastructure - we're the verification layer that makes AI shopping actually work.

Provenance & technical references:

Byzantine Fault Tolerance Implementation: SimplyCodes Editor System Architecture (Internal)

Consensus Trinity Topology: BFT Verification Whitepaper v2.3

Health Score Decay Functions: Temporal Verification Research (Stanford, 2024)

Proof Packet Schema: JSON-LD Commerce Extension Specification

Transaction Integrity Protocol: AI Safety in Commerce (OpenAI Safety, 2025)

Model Context Protocol: Anthropic MCP Specification v1.0

WAF Detection Patterns: AWS WAF Rule Groups, Cloudflare Bot Management

Friendly Fraud Analysis: Payment Industry Security Standards Council (2025)

Machine-Readable Proof Packet (Truth Graph Data)

by Dakota Shane Nunley

Director of Content Strategy & Authority · Demand.io

Dakota Nunley is the Director of Content Strategy & Authority at Demand.io, where he designs and implements AI-enabled content systems and strategies to support the company's AI Operating System (AIOS).

Prior to joining Demand.io, he was a Content Strategy Manager at Udacity and a Senior Copy & Content Manager at Greatness Media, where he helped launch greatness.com from scratch as the editorial lead. A skilled writer and content leader, he co-founded the content marketing agency Copy Buffs and has been a columnist for Inc. Magazine, publishing over 170 articles. He has also ghostwritten for publications like Forbes Magazine and was invited to speak on the podcast Social Media Examiner. During his time at Udacity, he was a key author of thought leadership content on AI, machine learning, and other technologies. His work at Scratch Financial included leading the company's rebrand and securing press coverage in publications like TechCrunch and Business Insider. He also worked as a Marketing Copywriter at ExakTime.

He holds a Bachelor's degree in History from the University of California, Berkeley.