You're using ChatGPT (or Perplexity, or Claude, or Gemini) to shop. You aren't browsing a website—you're delegating a task to an intelligence that feels dangerously close to human. You've just asked it to find you the best price on a pair of Nike Air Max 270s.

The AI responds instantly. It doesn't just give you a link; it gives you a plan.

"I found a 20% discount code for Nike. Use code SAVE20 at checkout. It was verified recently."

The dopamine hits. This is the promise of the agentic web: frictionless, expert-level assistance that saves you time and money. You copy the code. You paste it into the checkout box. You click "Apply."

"Invalid Code."

The dopamine crashes. In a split second, your brain registers what neuroscientists call a negative reward prediction error. The reward you mentally banked, the $30 in savings, has been stolen. Not by a hacker. Not by a glitch. By an AI that sounded absolutely certain about something it made up.

You try again. "That didn't work."

The AI apologizes profusely. "I'm sorry about that. Try NIKE25 instead."

You try NIKE25. "Code Expired."

You try WELCOME. Same result.

This isn't your fault, or the AI's. It's how the entire coupon economy was engineered.

The coupon economy runs on broken promises

Here's the uncomfortable truth the affiliate industry doesn't advertise: only 35.6% of codes on coupon aggregator sites actually work. That's not a glitch—that's the business model. A Dealspotr study testing codes across popular coupon sites revealed that nearly two-thirds of promoted codes were expired, invalid, or simply fabricated.

This "Failure Economy" exists because coupon aggregators monetize intent, not outcomes. Every click to a merchant earns affiliate revenue regardless of whether the code works. The merchant pays commission, the customer wastes time, and the aggregator profits either way.

The Failure Economy by the numbers

35.6% of codes on coupon aggregator sites actually work

78.4% of marketers use last-click attribution—yet only 21.5% believe it accurately reflects shopping behavior

13 million hours per week spent by Americans searching for coupon codes

46% of shoppers abandon carts when they can't find a working discount

70.22% baseline cart abandonment rate (Baymard Institute's 50-study meta-analysis)

Into this broken ecosystem, we've now injected AI assistants promising to "find you the best deals."

The engine: Why tokenization makes code verification impossible

Ask ChatGPT for a promo code and watch what happens. It will confidently generate something that looks plausible—"SAVE20NOW," "HOLIDAY25OFF," "FREESHIP2024"—with the certainty of someone who has seen a million codes and learned what they look like. But looking like a code and being a valid code are categorically different problems.

The issue begins at the fundamental level of how LLMs process text. Byte Pair Encoding (BPE), the tokenization scheme underlying GPT and most modern language models, began as a data compression algorithm and was adapted for neural machine translation by Sennrich et al. in 2016 to handle rare words by breaking text into subword units. It optimizes for natural language frequency patterns, not alphanumeric precision.

This creates a critical problem for promo codes. Research from Google DeepMind (Singh & Strouse, 2024) demonstrates that identical-length numbers tokenize inconsistently: "480" might become a single token while "481" splits into two. The string "SAVE20NOW" could fragment as [SAVE][20][NOW] or [SAV][E20][NO][W] depending on training corpus frequencies. As the researchers note: "The same quantity can split differently. The model does not consistently perceive positional value."
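To see how a merge table decides where a code gets cut, here is a toy sketch of BPE-style merging. The two merge tables are hypothetical, invented only to reproduce the two splits mentioned above; real tokenizers learn tens of thousands of merges from corpus statistics.

```python
def bpe_tokenize(text, merges):
    """Split text into characters, then apply merges in priority order."""
    tokens = list(text)
    for left, right in merges:  # earlier entries stand in for more frequent pairs
        merged, i = [], 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == left and tokens[i + 1] == right:
                merged.append(left + right)  # fuse the adjacent pair into one token
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return tokens


# Hypothetical merge tables, as if learned from two different corpora.
MERGES_A = [("S", "A"), ("SA", "V"), ("SAV", "E"), ("2", "0"), ("N", "O"), ("NO", "W")]
MERGES_B = [("S", "A"), ("SA", "V"), ("E", "2"), ("E2", "0"), ("N", "O")]

print(bpe_tokenize("SAVE20NOW", MERGES_A))  # ['SAVE', '20', 'NOW']
print(bpe_tokenize("SAVE20NOW", MERGES_B))  # ['SAV', 'E20', 'NO', 'W']
```

Same nine characters, two different sequences of units. Nothing in either sequence marks the string as one indivisible code.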

This tokenization chaos cascades into verification failure. When an LLM encounters a promo code, it doesn't see "SAVE20NOW" as a discrete, verifiable string; it sees a statistical pattern of subword fragments that resemble codes it encountered during training. The model performs pattern completion, not validation. Anthropic's research on induction heads (Olsson et al., 2022) describes transformer attention mechanisms that implement "fuzzy" matching where A* ≈ A, not exact character verification.

The arithmetic limitations compound the problem. Singh and Strouse found that LLM accuracy drops to zero for addition tasks involving five or more digits. If a model can't reliably add numbers, it certainly can't verify that "WINTER2024" is distinct from "WINTER2023" in a way that matters to a payment system expecting exact-match validation.
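The gap between "looks almost the same" and "is the same" fits in a few lines. This sketch uses Python's difflib as a stand-in for fuzzy similarity (it is not how a language model scores text) and a hypothetical one-entry set as the merchant's list of active codes.

```python
from difflib import SequenceMatcher

# Hypothetical merchant data: the only code that is live right now.
ACTIVE_CODES = {"WINTER2024"}


def similarity(a, b):
    """Fuzzy score in [0, 1]; 1.0 means the strings are identical."""
    return SequenceMatcher(None, a, b).ratio()


def is_valid(code, active_codes=ACTIVE_CODES):
    """Checkout-style validation: exact string membership, nothing fuzzier."""
    return code in active_codes


print(similarity("WINTER2024", "WINTER2023"))  # 0.9
print(is_valid("WINTER2023"))                  # False
print(is_valid("WINTER2024"))                  # True
```

A 90% match is a strong signal for a pattern matcher and worth exactly nothing to a payment system.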

The architecture is probabilistic by design. As Vaswani et al. established in "Attention Is All You Need" (2017), transformers convert decoder output to "predicted next-token probabilities" via softmax. Even with temperature set to zero, GPU floating-point operations aren't associative: (a+b)+c can differ from a+(b+c). When parallel hardware sums the same values in a different order from one run to the next, the results can differ slightly, introducing non-determinism that makes guaranteed exact output nearly impossible.

Translation for promo codes: There is no decoding strategy that guarantees exact output while maintaining the model's general capabilities.
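The non-associativity claim is easy to check on any machine. A minimal sketch in plain Python (CPU rather than GPU, with values chosen purely for illustration):

```python
a, b, c = 0.1, 0.2, 0.3

left_first = (a + b) + c   # sum the first pair, then add c
right_first = a + (b + c)  # sum the last pair, then add a

print(left_first)                 # 0.6000000000000001
print(right_first)                # 0.6
print(left_first == right_first)  # False
```

The difference is tiny, but when two candidate tokens have nearly tied scores, a discrepancy in the last decimal place can be enough to change which one gets picked.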

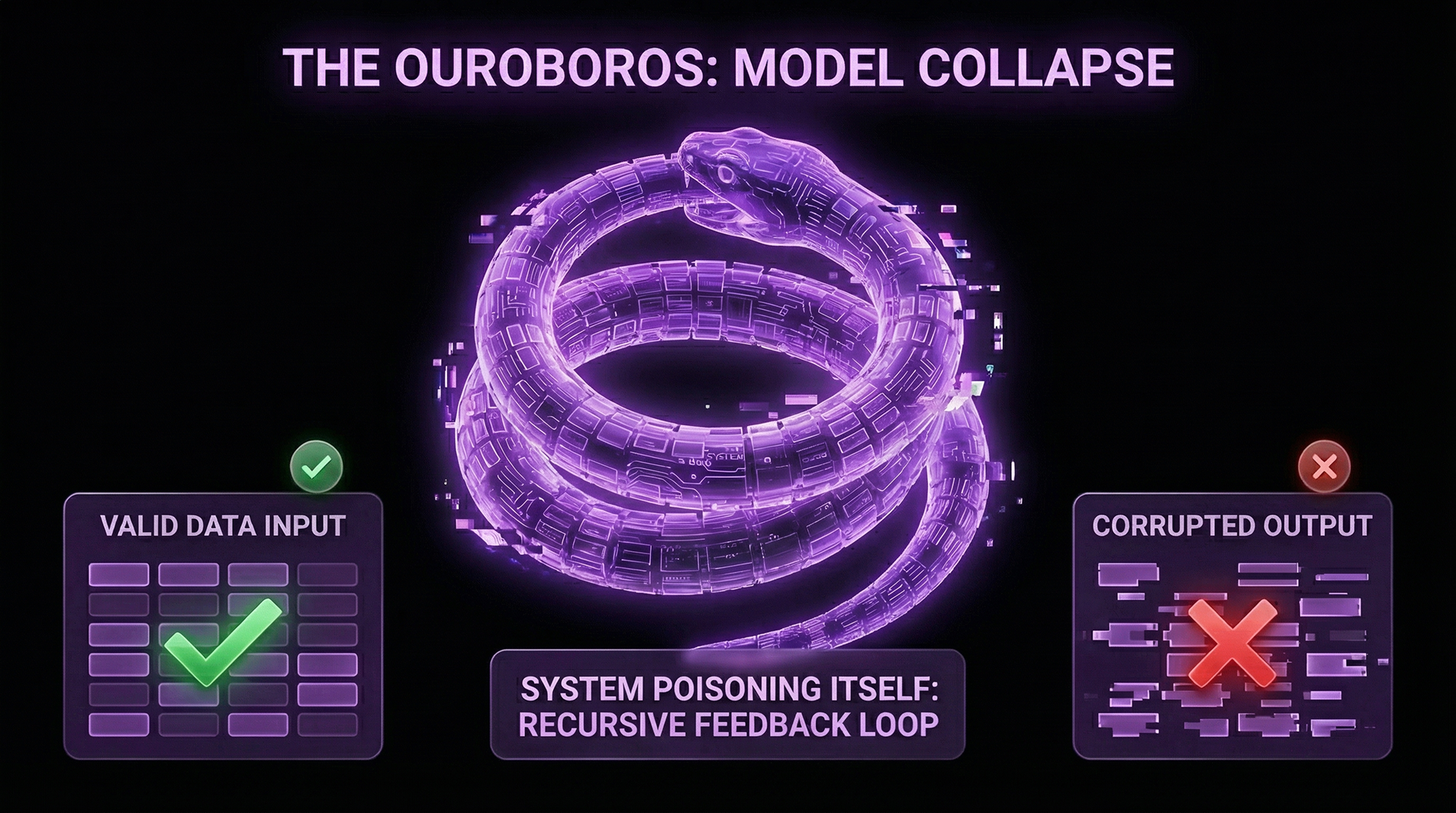

The fuel: Recursive pollution and model collapse

The tokenization problem would be manageable if LLMs had access to clean, validated training data. They don't. The web they trained on is saturated with coupon spam—expired codes, fabricated codes, and increasingly, AI-generated codes that never worked in the first place.

A landmark 2024 Nature study by Shumailov et al. documented what happens when AI trains on AI-generated content: "Indiscriminate use of model-generated content in training causes irreversible defects in the resulting models." The researchers demonstrated that even preserving 10% original human-created data merely delays rather than prevents collapse. "Tails of the original content distribution disappear," they write—meaning rare but accurate information gets progressively overwritten by statistically common (but wrong) patterns.

For promo codes, this creates a doom loop ("The Ouroboros"):

Coupon aggregators publish millions of codes, most expired or invalid

LLMs train on this corpus, learning what codes "look like" without learning which ones work

AI assistants generate plausible-looking codes based on pattern matching

These hallucinated codes get published across forums, blogs, and AI-generated content farms

Future LLMs train on this polluted data, amplifying the noise

Repeat until the signal-to-noise ratio approaches zero
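A back-of-the-envelope model makes the dilution concrete. The starting split mirrors the 35.6% working-code share cited earlier; the pool size and the number of hallucinated codes added per generation are made-up parameters, not measurements.

```python
def valid_share_over_generations(valid, invalid, hallucinated_per_gen, generations):
    """Track the share of working codes as each generation adds fake ones."""
    shares = []
    for _ in range(generations):
        shares.append(valid / (valid + invalid))
        # Assumption: new hallucinated codes get indexed each generation,
        # while the pool of genuinely working codes stays flat.
        invalid += hallucinated_per_gen
    return shares


# Hypothetical pool: 1,000 indexed codes, 356 of which work (35.6%).
shares = valid_share_over_generations(
    valid=356, invalid=644, hallucinated_per_gen=1000, generations=4
)
print([round(s, 3) for s in shares])  # [0.356, 0.178, 0.119, 0.089]
```

The valid codes never went anywhere. They just became a smaller and smaller slice of what a model sees.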

The Failure Economy has found its perfect accelerant. Where human content farms might produce hundreds of fake codes per day, AI systems can generate thousands. Each hallucinated "FREESHIP2024" that gets indexed becomes training data for the next generation of models, which confidently generate "FREESHIP2025" with no connection to any merchant's actual promotional calendar.

Meanwhile, legitimate codes—which change frequently and exist in relatively small quantities—get buried under an avalanche of plausible-sounding garbage. The original promo codes still exist in merchant databases, but they're needles in a haystack that grows larger every time an AI assistant invents a code that looks right.

The psychology: Cognitive fracture and the betrayal premium

When AI fails at promo codes, the damage extends beyond wasted time. It triggers specific psychological mechanisms that make recovery disproportionately difficult.

Reward Prediction Error

Wolfram Schultz's influential research on dopamine neurons explains the neurological impact:

Expected reward received → Dopamine neurons maintain baseline (no signal)

Unexpected reward received → Dopamine spikes

Expected reward fails to materialize → Dopamine neurons show depression at precisely the moment the reward should have arrived
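The three cases reduce to one subtraction. A minimal sketch, assuming the simplest form of the signal (reward received minus reward expected) and using the $30 discount from the opening example as a stand-in for reward magnitude:

```python
def reward_prediction_error(expected, received):
    """Simplified signal: positive is a pleasant surprise, negative is a letdown."""
    return received - expected


print(reward_prediction_error(expected=30.0, received=30.0))  # 0.0   (baseline)
print(reward_prediction_error(expected=0.0, received=30.0))   # 30.0  (spike)
print(reward_prediction_error(expected=30.0, received=0.0))   # -30.0 (dip)
```

Fuller models from reinforcement learning also account for predicted future reward; this version leaves that out to keep the asymmetry visible.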

The brain doesn't just fail to register a win; it actively registers a loss. This creates what psychologists call "cognitive fracture"—the jarring dissonance between confident expectation and unexpected failure.

The AI assistant presented the code with certainty

Your mental model anticipated the discount

The checkout page said no

The felt experience isn't "I didn't get a discount." It's "I was deceived."

The Betrayal Premium

Bohnet and Zeckhauser's research on betrayal aversion (2004, 2008) quantifies the asymmetry. Across six countries and 833 subjects, they found that people sacrifice more expected monetary value to avoid betrayal by another agent than to avoid equivalent losses from random chance.

When a dice roll fails, we accept bad luck. When an entity we trusted fails, we experience betrayal—and we demand a premium to trust again.

This combines with what Slovic (1993) called the asymmetry principle: "Trust is fragile. It is typically created rather slowly, but it can be destroyed in an instant by a single mishap." One failed promo code can undo dozens of successful recommendations.

Algorithm Aversion

Research by Dietvorst, Simmons, and Massey (2015) adds another layer: people lose confidence in an algorithm faster than in a human forecaster after seeing each make the same mistake. Even when algorithms demonstrably outperform humans, a single observed error triggers disproportionate rejection.

AI shopping assistants face a double bind—held to higher standards than human recommendations, with failures weighted more heavily than equivalent human errors.

Sugrophobia: The Fear of Being Duped

The endpoint is what behavioral economists call "sugrophobia." Vohs, Baumeister, and Chin (2007) documented how being deceived produces an "aversive self-conscious emotion with threat of self-blame."

When AI recommends a fake code, users don't just blame the AI—they blame themselves for trusting it. This self-directed frustration triggers rumination and avoidance.

41% of consumers report no trust whatsoever in AI shopping assistants (YouGov, 2024)

Only 13% actively trust AI for shopping advice

This isn't rational caution—it's one-trial learning. Garcia and Koelling's taste aversion research (1966) demonstrated that organisms can form permanent avoidance behaviors after a single negative experience—an evolutionary adaptation for avoiding poison. For AI assistants, each hallucinated promo code is a small dose of poison, creating lasting aversion that no amount of subsequent success easily overcomes.

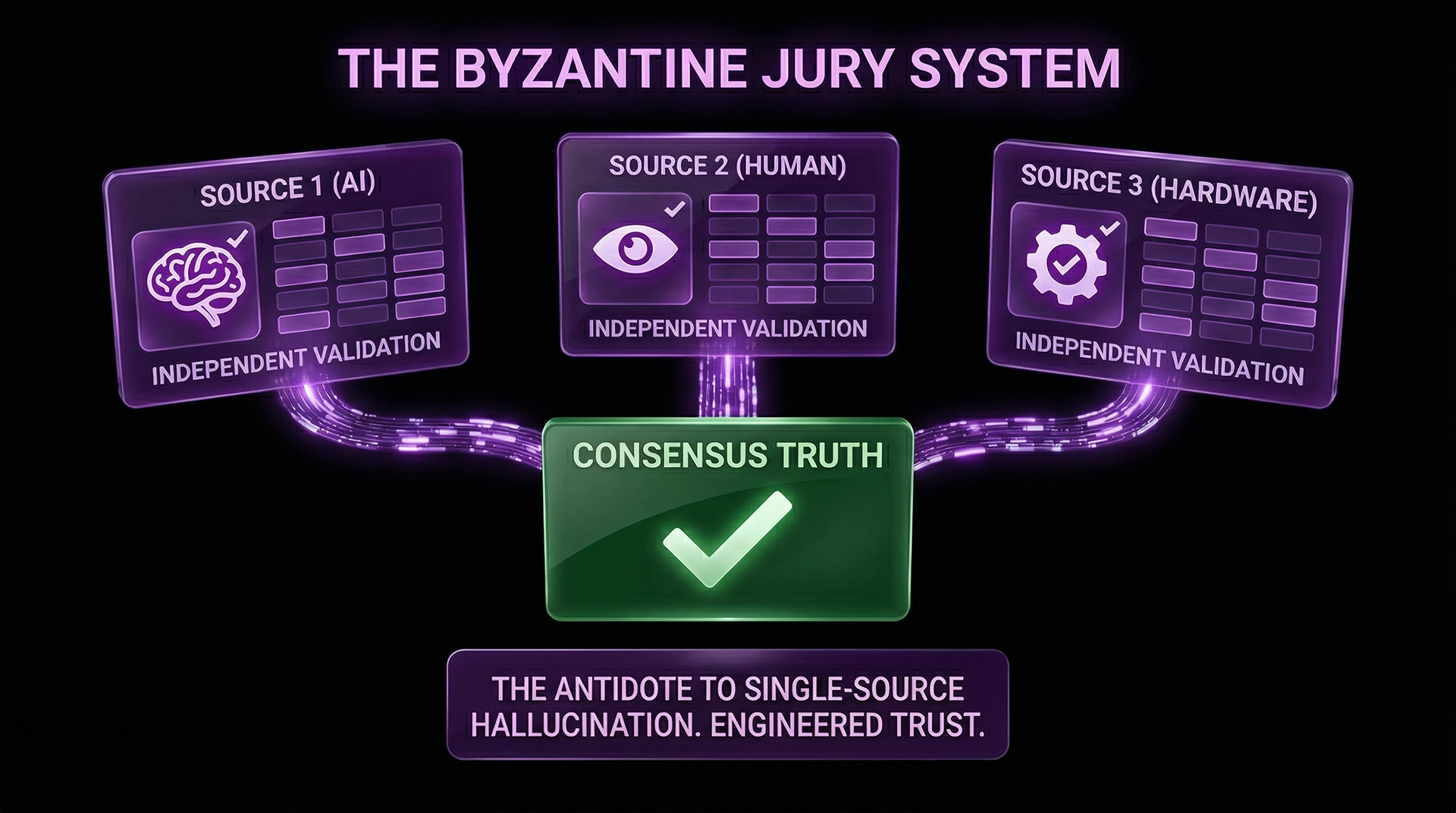

The fix: Byzantine Fault Tolerant verification and proof packets

The solution isn't making AI "better" at coupon codes. Probabilistic systems will always have irreducible uncertainty. The solution is routing deterministic verification through systems designed for deterministic tasks.

This requires what we call Transaction Integrity Infrastructure—a layer between AI agents and commerce systems that translates probabilistic queries into verified, deterministic outputs. The AI asks, "What discount applies here?" The infrastructure checks, validates, and returns not a guess but a proof packet: a cryptographically verifiable confirmation that a specific code works at a specific merchant at a specific time.
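What might a proof packet look like in practice? Here is one possible shape, sketched with Python's standard library. The field names, the merchant identifier, and the shared secret are all assumptions made for illustration, and HMAC is used only because it ships with Python; a production system would more likely use public-key signatures so that anyone can verify a packet without holding the signing key.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the verification service.
SECRET_KEY = b"demo-key-not-for-production"


def _canonical_bytes(claim):
    """Serialize the claim the same way every time so signatures are stable."""
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode("utf-8")


def make_proof_packet(code, merchant, checked_at, valid, key=SECRET_KEY):
    """Bundle a verification result with a signature over its contents."""
    claim = {"code": code, "merchant": merchant, "checked_at": checked_at, "valid": valid}
    signature = hmac.new(key, _canonical_bytes(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_proof_packet(packet, key=SECRET_KEY):
    """Recompute the signature and compare in constant time."""
    expected = hmac.new(key, _canonical_bytes(packet["claim"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, packet["signature"])


packet = make_proof_packet("SPRING10", "example-merchant", "2025-03-15T12:00:00Z", False)
print(verify_proof_packet(packet))   # True

packet["claim"]["valid"] = True      # someone edits the result after the fact
print(verify_proof_packet(packet))   # False
```

The point of the signature is that the claim cannot be quietly edited: flipping "valid" from false to true breaks verification.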

The architectural model borrows from distributed systems. Byzantine Fault Tolerant (BFT) consensus, developed for environments where some nodes may be unreliable or actively malicious, provides a framework for verification that doesn't trust any single source. Rather than asking one AI what code might work, BFT verification queries multiple independent sources, compares results, and only returns codes that achieve consensus across validators.
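The voting step can be sketched in a few lines. This is only the tally, not a full BFT protocol (there are no message rounds or signed votes here), and it assumes each validator reports one of a handful of string verdicts. The quorum rule follows the classic bound that n validators can tolerate at most f faulty ones when n is at least 3f + 1.

```python
from collections import Counter


def quorum_size(n_validators):
    """Votes required so that up to f = (n - 1) // 3 faulty validators cannot decide."""
    max_faulty = (n_validators - 1) // 3
    return n_validators - max_faulty


def consensus_verdict(votes):
    """Return the verdict that reaches quorum, or None when there is no consensus."""
    if not votes:
        return None
    verdict, count = Counter(votes).most_common(1)[0]
    return verdict if count >= quorum_size(len(votes)) else None


# Four hypothetical validators, one of which disagrees (or lies).
print(consensus_verdict(["valid", "valid", "valid", "invalid"]))    # valid
# No verdict reaches the 3-of-4 quorum, so nothing is returned to the user.
print(consensus_verdict(["valid", "valid", "invalid", "expired"]))  # None
```

Returning nothing when validators disagree is the design choice that matters: an unverified code never reaches the shopper.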

This approach acknowledges that promo codes exist in a fundamentally different information category than natural language. Codes are binary artifacts—they work or they don't, with no gradient between. Asking whether "SAVE20" is "close enough" to a valid code is like asking whether a cryptographic hash is "approximately correct." The domain demands exact match verification, which means the domain demands non-probabilistic systems.

The emerging category of deterministic commerce infrastructure addresses this gap. AI agents remain the interface—they understand natural language queries, assess user intent, and communicate in human-readable forms. But behind the interface, specialized verification systems handle the binary operations: Does this code exist? Is it currently active? Does it apply to this cart? What are its constraints?
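Those four questions map onto four ordinary checks. A minimal sketch, assuming a hypothetical in-memory catalog in place of a merchant API, with invented field names and an invented SPRING10 promotion:

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    VALID = "valid"
    NOT_FOUND = "not_found"
    NOT_ACTIVE = "not_active"
    NOT_APPLICABLE = "not_applicable"


@dataclass(frozen=True)
class PromoRecord:
    starts_at: datetime
    ends_at: datetime
    min_cart_total: float = 0.0
    eligible_skus: frozenset = frozenset()  # empty means any item qualifies


def check_code(code, cart_skus, cart_total, now, catalog):
    record = catalog.get(code)  # Does this code exist? Exact match only.
    if record is None:
        return Status.NOT_FOUND
    if not (record.starts_at <= now < record.ends_at):  # Is it currently active?
        return Status.NOT_ACTIVE
    if record.eligible_skus and not (record.eligible_skus & set(cart_skus)):
        return Status.NOT_APPLICABLE  # Does it apply to this cart?
    if cart_total < record.min_cart_total:  # What are its constraints?
        return Status.NOT_APPLICABLE
    return Status.VALID


# Hypothetical catalog with a single live promotion.
CATALOG = {
    "SPRING10": PromoRecord(
        starts_at=datetime(2025, 3, 1, tzinfo=timezone.utc),
        ends_at=datetime(2025, 4, 1, tzinfo=timezone.utc),
        min_cart_total=50.0,
    )
}
NOW = datetime(2025, 3, 15, tzinfo=timezone.utc)

print(check_code("SPRING10", ["sku-1"], 120.0, NOW, CATALOG))  # Status.VALID
print(check_code("SAVE20", ["sku-1"], 120.0, NOW, CATALOG))    # Status.NOT_FOUND
```

Every branch ends in a named status. There is no path through the function that returns "probably."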

This hybrid architecture—probabilistic intelligence paired with deterministic verification—represents the mature form of AI commerce. It doesn't require AI to do what AI cannot do. It routes each task to the system architecturally suited to perform it.

The stakes: Why getting this right matters

AI shopping agents aren't a novelty; they're the next interface layer. McKinsey projects AI agents could account for over 20% of e-commerce traffic within five years. OpenAI and Stripe's "Agentic Commerce Protocol," launched in September 2025, signals major infrastructure players preparing for this shift. Visa's MCP Server and Agent Toolkit indicate payments networks anticipating machine-to-machine commerce.

The question isn't whether AI will mediate shopping. The question is whether that mediation will work.

Current failure rates suggest we're not ready. Gorgias, a leading e-commerce customer service platform, explicitly warns in their documentation that AI chatbots invent discount codes: "Shopper requests a discount. AI creates 'SAVE20NOW'—a realistic-sounding but non-existent code that fails at checkout." Air Canada learned this the expensive way when their AI chatbot invented a bereavement discount policy that cost the airline $812 in tribunal-ordered damages.

Scaled across billions of transactions, these individual failures aggregate into systemic dysfunction. The retail industry already faces $117.47 billion in annual chargeback losses (Chargebacks911, 2023), with friendly fraud comprising 60-80% of disputes. AI-promised discounts that don't materialize create a new vector for disputes: customers who feel deceived demand refunds, and merchants absorb costs for failures they didn't cause.

The Failure Economy has survived because failed codes merely waste consumer time. When AI agents start making purchases autonomously, failed codes waste merchant money and consumer trust simultaneously. The industry term already emerging—"SNADpocalypse," for the flood of "significantly not as described" claims—captures the coming collision between AI confidence and commercial reality.

The category: What transaction integrity infrastructure looks like

Building commerce infrastructure for AI agents requires abandoning the assumption that AI will improve its way out of architectural mismatches. Instead, we need verification systems that:

Accept probabilistic input. AI agents will continue generating approximate queries—"find me a discount for this cart." Infrastructure must parse intent without requiring exact specifications.

Return deterministic output. After verification, what comes back must be binary and provable: This code works. This code doesn't exist. This code is expired. No probabilities, no hedging, no "try this and see."

Deliver the "Confident No." When no valid code exists, say so definitively. A verified "no discounts available" is more valuable than an endless search through expired codes. This provides cognitive closure—the user knows they've found the best price and can complete their purchase without second-guessing.

Provide proof packets, not just codes. A verification response should include cryptographic proof of validation—when the code was checked, against what merchant API, with what result. This creates audit trails that resolve disputes and enable trust calibration.

Update in real-time. Promo codes expire. Campaigns end. Merchant inventories change. Verification infrastructure must reflect current state, not cached data from training cutoffs months or years prior.

Fail gracefully and explicitly. When no valid code exists, say so. False negatives are vastly preferable to false positives in a system where hallucinated codes create concrete harms.
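Put together, the requirements above describe a small pipeline: take whatever candidates the AI proposes, check each one against live data, and hand back either the best verified code or a definitive no. A minimal sketch, assuming a hypothetical lookup function in place of a real merchant check, discounts expressed as positive dollar amounts, and a five-minute freshness window picked arbitrarily:

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Verdict:
    code: Optional[str]  # None is the "Confident No": nothing valid exists
    discount: float
    checked_at: float    # Unix timestamp of the verification


def best_verified_code(
    candidates: Iterable[str],
    verify: Callable[[str], Optional[float]],
    now: float,
) -> Verdict:
    """Keep only codes that verify right now; return the largest discount."""
    best_code, best_discount = None, 0.0
    for code in sorted(set(candidates)):  # sorted so ties resolve the same way every run
        discount = verify(code)           # None means the code did not verify
        if discount is not None and discount > best_discount:
            best_code, best_discount = code, discount
    return Verdict(best_code, best_discount, now)


def is_fresh(verdict: Verdict, now: float, max_age_seconds: float = 300.0) -> bool:
    """Reject results older than the freshness window instead of trusting a cache."""
    return 0 <= now - verdict.checked_at <= max_age_seconds


# Hypothetical live data standing in for a merchant-side check.
LIVE_DISCOUNTS = {"SPRING10": 10.0}


def lookup(code: str) -> Optional[float]:
    return LIVE_DISCOUNTS.get(code)


print(best_verified_code(["SAVE20", "NIKE25", "SPRING10"], lookup, now=1000.0).code)  # SPRING10
print(best_verified_code(["SAVE20", "NIKE25", "WELCOME"], lookup, now=1000.0).code)   # None
```

The second call is the one the opening scenario needed: three guesses in, one clear answer out, and the shopper can check out knowing there was nothing left to find.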

This is about completing the stack, not replacing AI. AI handles what AI does well: understanding language, assessing context, communicating naturally. Deterministic systems handle what they do well: exact verification, binary validation, provable state. Together, they create an architecture where a confident answer is backed by verification rather than merely sounding correct.

The path forward is infrastructure, not iteration

Every generation of AI brings marginally lower hallucination rates. GPT-4 improves on GPT-3.5. Reasoning models outperform base models on some benchmarks. But the fundamental mismatch persists: probabilistic systems cannot guarantee deterministic outputs. No amount of training eliminates this architectural constraint.

The Failure Economy, meanwhile, continues poisoning training data. Model collapse continues degrading signal. Consumer trust continues eroding with each AI-generated code that returns "Invalid" at checkout. The doom loop tightens.

Breaking the loop requires building infrastructure purpose-built for the seam between AI intelligence and commercial certainty. Transaction integrity infrastructure. Deterministic verification layers. Proof packets that transform AI suggestions into validated commitments.

AI lies about promo codes because it cannot do otherwise: it's a pattern-completion engine operating in a domain that requires exact matching against authoritative ground truth. The fault lies not with the AI but with our expectation that prediction machines could perform verification tasks.

The fix is routing the right tasks to the right systems. The opportunity is building the infrastructure that makes that routing seamless. The future of AI commerce depends not on smarter models but on architectures that acknowledge what intelligence can and cannot do—and build accordingly.

Stop guessing at checkout.

Right now, you can download and use the SimplyCodes browser extension or mobile app to get verified codes at checkout.

But soon, we'll be launching our very own ChatGPT App, the first tool that stops AI from hallucinating your discounts. When it launches, you'll simply type @SimplyCodes in any ChatGPT conversation to invoke real-time verification against the SimplyCodes database.

Follow @SimplyCodes to stay up-to-date on the launch.


by Dakota Shane Nunley

Director of Content Strategy & Authority · Demand.io

Dakota Nunley is the Director of Content Strategy & Authority at Demand.io, where he designs and implements AI-enabled content systems and strategies to support the company's AI Operating System (AIOS).

Prior to joining Demand.io, he was a Content Strategy Manager at Udacity and a Senior Copy & Content Manager at Greatness Media, where he helped launch greatness.com from scratch as the editorial lead. A skilled writer and content leader, he co-founded the content marketing agency Copy Buffs and has been a columnist for Inc. Magazine, publishing over 170 articles. He has also ghostwritten for publications like Forbes Magazine and was invited to speak on the podcast Social Media Examiner. During his time at Udacity, he was a key author of thought leadership content on AI, machine learning, and other technologies. His work at Scratch Financial included leading the company's rebrand and securing press coverage in publications like TechCrunch and Business Insider. He also worked as a Marketing Copywriter at ExakTime.

He holds a Bachelor's degree in History from the University of California, Berkeley.